Web application penetration testing tutorials: Information gathering

This is the second tutorial in the Web application penetration testing series. Information gathering is the step in which we try to collect information about the target we are trying to break into.

This information may include open ports, running services, and applications such as unauthenticated administrative consoles or ones that still have default passwords.

I want to quote Abraham Lincoln: give me six hours to chop down a tree and I will spend the first four sharpening the axe.

In simple words, the more information we gather about the target, the better it is for us, because a larger attack surface will be available to us.

Suppose you want to break into your neighbor's house. You would probably observe the different locks they use before breaking in, so that you can test ways to break those locks beforehand.

Similarly, when assessing a web app, we need to explore all the possibilities of breaking into it, because the more information we can gather about the target, the greater the chance that we can break into it.

In this Web application penetration testing tutorial, we will cover the following topics:

- Types of information gathering

- Enumerating domains, files, and resources

Information Gathering Techniques

Classically speaking, information gathering techniques fall into the following two classes:

- Active techniques

- Passive techniques

Active Information Gathering Techniques

Typically, an active information gathering technique involves connecting to our target to get information.

This can include running port scans, enumerating files, and so on. Active information gathering techniques can be detected by the target, so care should be taken not to perform unnecessary techniques that generate a lot of noise.

They can be picked up by the target's firewall, and scanning for a long time can also slow the target down for regular users.

Passive Information Gathering Techniques

Using passive information gathering techniques, we use third-party websites and tools that do not contact the target to harvest data for our reconnaissance purposes.

Websites such as Shodan and Google can dig up a lot of data about a website, and using them correctly is extremely beneficial for getting information that can later be used against the target.

The best part of passive information gathering techniques is that the target never gets a hint that we are actually performing any reconnaissance. Since we do not connect to the website, no server logs are generated.

Enumerating Domains, Files, and Resources

In this section, we will try out different kinds of recon techniques.

Domain Enumeration

Finding the subdomains of a website can land us in surprising places. I remember a story from the Israeli security researcher Nir Goldshlager, in which he ran a subdomain enumeration scan on a Google service. Among the subdomains he found, one was a publicly exposed web application with a local file inclusion vulnerability.

Nir then obtained a shell on Google's server. Nir's intentions were not bad; he reported this vulnerability to Google's security team.

Let us now learn some information gathering techniques. We will use both active and passive methods.

The following tools will be discussed:

- Fierce

- theHarvester

- SubBrute

- CeWL – Custom Word List Generator

- DirBuster

- WhatWeb

- Maltego

The following websites will be used for Passive Enumeration:

- Wolfram Alpha

- Shodan

- DNSdumpster

- Reverse IP Lookup using YouGetSignal

- Pentest-Tools

- Google Advanced Search

Fierce

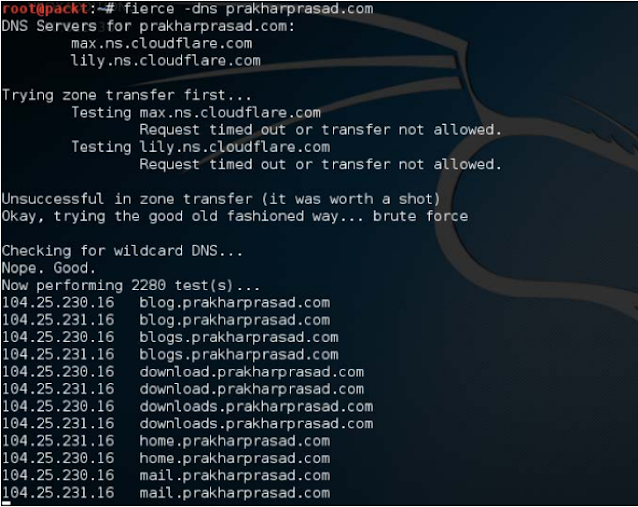

Fierce is an open source active recon tool that enumerates all the domains of a target website.

This tool was written by Robert (RSnake) Hansen and is installed by default in Kali Linux.

The powerful Perl script implements techniques such as zone transfer and wordlist brute-forcing to find subdomains of the target domain:

fierce -dns target.com

Let's run Fierce against iitk.ac.in and see how it performs. It is shown in the following screenshots:

Voila, Fierce presented us with a list of subdomains. One thing to note is that Fierce first found the name servers of iitk.ac.in, and then tried to perform a zone transfer against them.

Fortunately, one of the name servers was misconfigured, and Fierce then grabbed a list of DNS entries, including subdomains, from the misconfigured server.

We can also use the dig tool, which is available on *nix systems, to perform a zone transfer without using Fierce. The command to perform a zone transfer with dig is:

dig @<name-server-of-target> <target-host-or-address> axfr

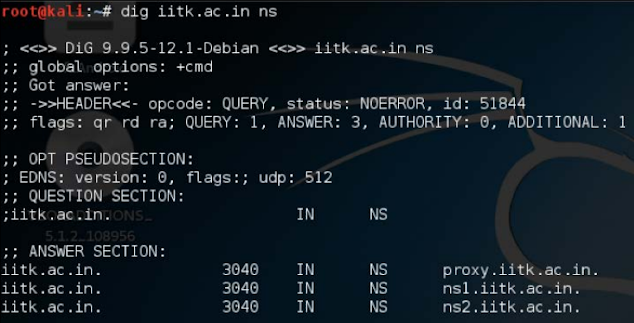

For example, we do the same for iitk.ac.in using dig:

dig @ns.iitk.ac.in iitk.ac.in axfr

As expected, we obtain a list of subdomains by performing a zone transfer with dig.

You may be curious how to find the name servers of the target website, like the one supplied to dig in the last example.

We can use the nslookup utility or, in fact, dig itself to view the name servers. The command to view name servers with dig is:

dig <target-host> ns

In the last example, to find the name servers of the target, we can use:

dig iitk.ac.in ns

By running this command we can list the name servers, and then try a zone transfer against each name server in turn to get a list of domains.

We get a list of the target's name servers. Although dig is very handy at times, using Fierce is always a good idea as it automates the whole process.
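
The manual dig workflow described above (list the name servers, then try a zone transfer against each one) can be sketched in Python. This is only a rough illustration: the function names and the sample output are made up for the example, and the parser assumes the usual five-column answer lines that dig prints. It only builds the commands and does not run them.

```python
def parse_ns_records(dig_output):
    """Return the name server hostnames found in the NS answer lines of dig output."""
    servers = []
    for line in dig_output.splitlines():
        line = line.strip()
        # Skip blank lines and dig's comment lines, which start with ';'.
        if not line or line.startswith(";"):
            continue
        fields = line.split()
        # Assumed answer layout: <name> <ttl> <class> <type> <rdata>
        if len(fields) >= 5 and fields[2] == "IN" and fields[3] == "NS":
            servers.append(fields[4].rstrip("."))
    return servers


def axfr_commands(domain, nameservers):
    """Build one 'dig @<ns> <domain> axfr' argument list per name server."""
    return [["dig", "@" + ns, domain, "axfr"] for ns in nameservers]


# Hypothetical output of 'dig example.com ns', used only for illustration.
SAMPLE_OUTPUT = """\
; <<>> DiG <<>> example.com ns
;; ANSWER SECTION:
example.com.        3600    IN      NS      ns1.example.com.
example.com.        3600    IN      NS      ns2.example.com.
"""

for command in axfr_commands("example.com", parse_ns_records(SAMPLE_OUTPUT)):
    print(" ".join(command))
```

Each argument list could be handed to subprocess.run on a system where dig is installed, and only against targets you are authorized to test.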

Keep in mind, though, that misconfigured zone transfers are rare in practice.

As you can see, Fierce, as usual, started by finding the name servers associated with the domain, prakharprasad.com.

But sadly, neither of the two name servers allowed zone transfers. Fierce then fell back to brute force to find the subdomains.

By default, Fierce uses its own built-in wordlist for subdomain brute-forcing. We can use the -wordlist switch to supply our own wordlist for guessing subdomains with Fierce.

Let's create a custom wordlist with the following keywords:

- download

- Sandbox

- Password

- hidden

- random

- test

Now we will run Fierce with this custom wordlist.

Now we can see a new subdomain that matches one of the keywords in our wordlist.

It is therefore clear that a good wordlist yields a good set of subdomains.

Fierce's performance can be improved considerably by increasing the thread count. To do this, we use the -threads switch.
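
To make the idea of wordlist brute-forcing with several threads more concrete, here is a minimal Python sketch. It is not Fierce's implementation; the function names, the default of four threads, and the pluggable resolver argument are assumptions made for the example.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def candidates(domain, words):
    """Turn wordlist entries into candidate hostnames under the domain."""
    return [f"{word.strip().lower()}.{domain}" for word in words if word.strip()]


def brute_force(domain, words, resolve=socket.gethostbyname, threads=4):
    """Resolve every candidate in a thread pool and keep the ones that exist."""

    def try_resolve(host):
        try:
            return host, resolve(host)
        except OSError:
            # socket.gaierror is a subclass of OSError: the name did not resolve.
            return host, None

    with ThreadPoolExecutor(max_workers=threads) as pool:
        results = list(pool.map(try_resolve, candidates(domain, words)))
    return {host: ip for host, ip in results if ip is not None}


if __name__ == "__main__":
    # Only run this against a domain you are authorized to test.
    keywords = ["download", "sandbox", "password", "hidden", "random", "test"]
    print(brute_force("example.com", keywords))
```

Passing the resolver as a parameter keeps the sketch easy to try offline with a stub function in place of real DNS lookups.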

theHarvester

theHarvester is an open-source reconnaissance tool that can dig out heaps of information, including subdomains, email addresses, employee names, open ports, and so on.

theHarvester mainly uses passive techniques, and sometimes active ones.

Let's run this amazing tool against my homepage:

theharvester -d prakharprasad.com -b google

Look at it! TheHarvester found a list of sub-domains and an email address.

We could use this email address for client-side exploitation or phishing, but that is a separate topic. To reveal this information, the tool only used Google as a data source.
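
The general idea of pulling email addresses and subdomains out of text returned by a data source can be sketched as follows. This is an illustration of the concept only, not theHarvester's actual code; the function name, the regular expressions, and the sample text are assumptions.

```python
import re


def harvest(text, domain):
    """Extract email addresses and subdomains of the given domain from a text blob."""
    escaped = re.escape(domain)
    # Email addresses at the domain or at any of its subdomains.
    emails = set(re.findall(rf"[A-Za-z0-9._%+-]+@(?:[A-Za-z0-9-]+\.)*{escaped}", text))
    # Hostnames under the domain; the lookbehind skips the host part of emails.
    hosts = set(re.findall(rf"(?<![@\w.-])(?:[A-Za-z0-9-]+\.)+{escaped}", text))
    return sorted(emails), sorted(hosts)


# Hypothetical search-result text, used only for illustration.
sample = "Contact admin@example.com or visit blog.example.com and mail.example.com."
emails, hosts = harvest(sample, "example.com")
print(emails)
print(hosts)
```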

We can control the data source used by theHarvester with the -b switch. The data sources that theHarvester supports are:

google, googleCSE, bing, bingapi, pgp, linkedin, google-profiles, people123, jigsaw, twitter, googleplus, all

Let's try running theHarvester on our domain with LinkedIn as the data source. Let's see what happens next:

The tool then displays the LinkedIn names that are related to the domain. There are other command-line switches to fiddle with as well. theHarvester also ships with Kali Linux as a default tool.

SubBrute

SubBrute is an open-source subdomain enumeration tool. It is community maintained and aims to be the fastest and most accurate subdomain enumeration tool. It uses open DNS resolvers to circumvent rate limiting.

It does not come preinstalled with Kali Linux and has to be downloaded from https://github.com/TheRook/subbrute:

./subbrute.py target.com

Let's run SubBrute against PacktPub's website and see what results it offers:

You can see the list of subdomains it found. The tool uses open DNS resolvers as part of this process. We can use the -r switch to supply our own custom resolvers list.

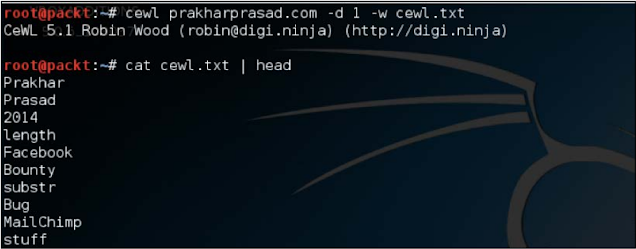

CeWL

CeWL is a custom wordlist generator created by Robin Wood. It basically spiders the target site to a certain depth and then returns a list of words.

This wordlist can later be used as a dictionary for brute-forcing web application logins, for example, an administrative portal.

CeWL ships with Kali Linux, but it can also be downloaded separately. Its basic usage is:

./cewl target.com

Let me run this tool on my homepage with a link depth count of 1.

Look at that! It gave us a good-looking wordlist based on the data scraped from the website. CeWL also supports HTTP Basic Authentication and provides an option for proxying traffic.

More options can be explored through its --help switch. Rather than displaying the wordlist output on the console, we can save it to a file using the -w switch.

You can clearly see that the generated wordlist was written to the cewl.txt file.

There is also a -v switch to increase the verbosity of CeWL's output. It is very useful when the site being spidered is large and we want to know what is going on underneath.
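
The core idea behind a custom wordlist generator can be sketched in a few lines of Python. This is not CeWL's code: it handles a single page that has already been downloaded, does no spidering, and the function name and the minimum word length of 3 are assumptions made for the example.

```python
import re
from collections import Counter
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of script and style tags."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def wordlist_from_html(html, min_length=3):
    """Return the unique words of a page, most frequent first."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    words = re.findall(r"[A-Za-z]+", " ".join(parser.chunks))
    counts = Counter(word for word in words if len(word) >= min_length)
    return [word for word, _ in counts.most_common()]


page = "<html><script>var secret = 1;</script><p>Admin portal for Admin users</p></html>"
print(wordlist_from_html(page))
```

Words that appear often on the target's own pages tend to be good candidates for passwords and hidden paths, which is why the list is ordered by frequency here.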

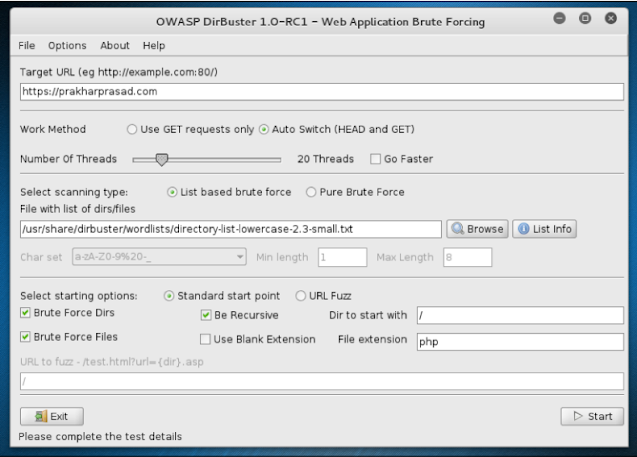

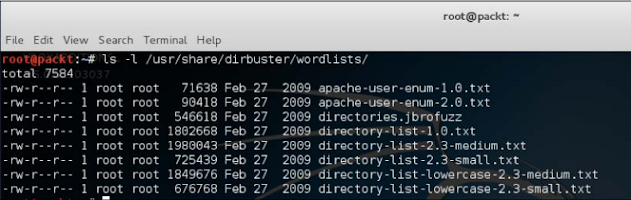

DirBuster

DirBuster is a file/directory brute-forcer. It is written in Java and developed by members of the OWASP community.

It is a GUI application and comes with Kali Linux. DirBuster supports multithreading and is capable of brute-forcing the target at an insane speed.

The Dirbuster Project: https://www.owasp.org/index.php/Category:OWASP_DirBuster_Project

The GUI of this tool is straightforward and provides tons of options for brute-forcing.

It can go up to 100 threads, which is surprisingly fast, provided enough bandwidth is available.

It comes with a set of wordlists for different requirements and conditions.

Let us run DirBuster against a target to look for files and folders:

It found some directories and files, and although some of them were false positives, not all results are wrong.

One thing to keep in mind when using DirBuster is that it generates a lot of traffic, which can easily slow down small websites, so the thread count should be set appropriately to avoid taking the target down.

DirBuster gives lots of false positives, so for every directory or file it reports, we have to go and verify it manually.
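
The two ideas above, generating candidate paths from a wordlist and weeding out false positives, can be sketched in Python. This is an illustration rather than DirBuster's logic: the function names, the baseline comparison against a response for a path known not to exist, and the 32-byte tolerance are all assumptions.

```python
from urllib.parse import urljoin


def candidate_urls(base_url, words, extensions=("",)):
    """Combine every word with every extension under the base URL."""
    if not base_url.endswith("/"):
        base_url += "/"
    urls = []
    for word in words:
        for ext in extensions:
            urls.append(urljoin(base_url, word + ext))
    return urls


def looks_real(status, length, baseline_status, baseline_length, tolerance=32):
    """Guess whether a response is a real hit.

    The baseline is the response the server gave for a random path that
    cannot exist. A response that looks just like the baseline is treated
    as a false positive, for example a custom error page served with 200.
    """
    if status == 404:
        return False
    if status == baseline_status and abs(length - baseline_length) <= tolerance:
        return False
    return True


print(candidate_urls("http://example.com", ["admin", "backup"], ("", ".php")))
```

Even with a check like this, results still deserve a manual look, since servers can vary their error pages in ways a simple length comparison will not catch.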

For those who want to use a more polished command-line version, you can try wfuzz. This is more feature-rich, advanced, and versatile than DirBuster.

WhatWeb

To get basic information about a website, we can use WhatWeb, which is an active recon tool.

WhatWeb listed cookies, country, and unusual headers.
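
As a rough illustration of the kind of information response headers can give away, the following Python sketch filters a set of headers down to the ones that commonly reveal software details. It does not reflect how WhatWeb works internally; the function name, the chosen header names, and the sample response are assumptions.

```python
# Header names chosen for this example; real tools look at far more signals.
REVEALING_HEADERS = (
    "server",
    "x-powered-by",
    "x-aspnet-version",
    "x-generator",
    "via",
    "set-cookie",
)


def revealing_headers(headers):
    """Return the headers that commonly disclose server or framework details."""
    found = {}
    for name, value in headers.items():
        if name.lower() in REVEALING_HEADERS:
            found[name.lower()] = value
    return found


# Hypothetical response headers, used only for illustration.
sample_headers = {
    "Content-Type": "text/html",
    "Server": "Microsoft-IIS/8.0",
    "X-Powered-By": "ASP.NET",
}
print(revealing_headers(sample_headers))
```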

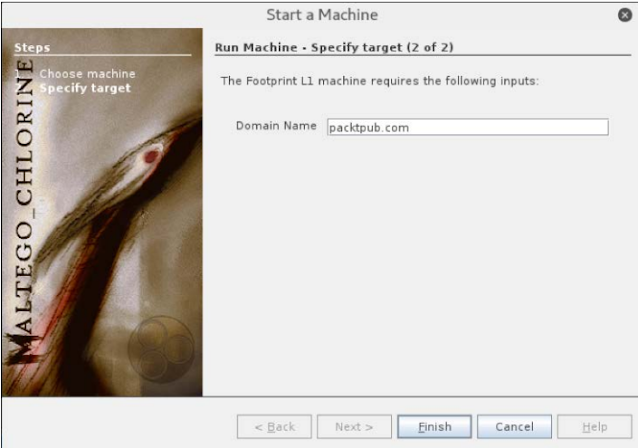

Maltego

Maltego is an open-source intelligence (OSINT) tool developed by Paterva. It is a commercial tool, though the community edition ships by default with Kali Linux. We will use the community edition for this demonstration.

Maltego can be launched from the Information Gathering section of Kali Linux's Applications menu.

During the first launch, Maltego will ask you to register for a Community Edition license, or to log in directly if you are already registered. This step must be completed in order to access and run Maltego.

After completing the basic formalities, we can run Maltego again and we will be greeted with a dialog that gives us the option to run a machine.

Machines are the different categories or types of information gathering that we are interested in.

In the dialog, we are presented with the different machines, or information gathering categories. The footprinting machines come in different levels, from L1 to L3.

L1 is the fastest and L3 is the slowest, although L3 gives better results at the expense of time.

Now let us go ahead and run an L1 footprint on our target. After selecting Footprint L1 and hitting the Next button, we are presented with a dialog similar to the following, which asks for the domain name.

In our case, we will enter packtpub.com and hit the Finish button.

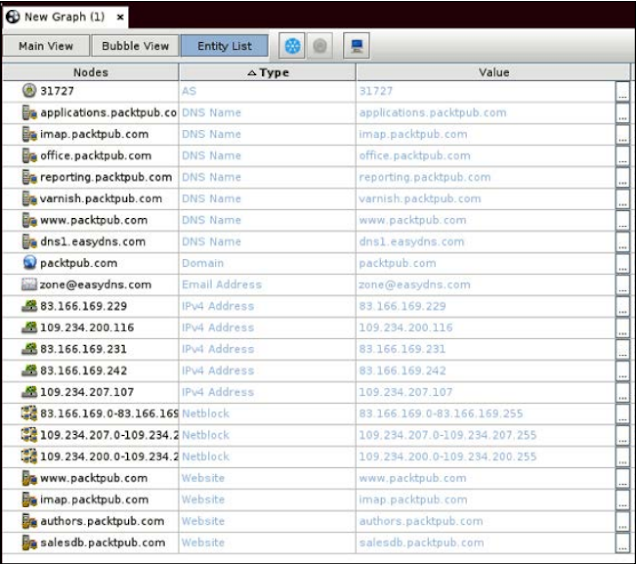

We are now shown a graph for our target, in which the domains, servers, email addresses, and so on are displayed.

To get a tabular view, we can select the entity list, and all the information will be shown in the form of a table like this:

Maltego is more feature-rich than I have shown. I would recommend that the reader play with Maltego to explore its full potential.

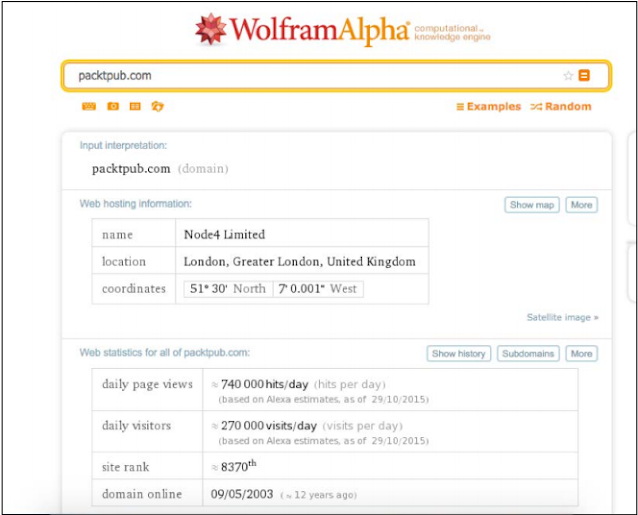

Wolfram Alpha

Wolfram Alpha is a knowledge engine that provides services such as computation and analysis through an AI-based search interface.

A key feature from a security tester's point of view is that Wolfram Alpha provides a list of subdomains for a website.

We will try to enumerate some subdomains of packtpub.com from the Wolfram Alpha website.

If we press the subdomains button, we will be presented with a shiny list of the subdomains of packtpub.com, as shown in the following image:

There they are! It also presents us with the daily visitors per subdomain. This can be used to look for isolated or rarely visited subdomains, which are statistically more likely to have weaknesses because they are mostly staging or testing systems.

Shodan

To start with, I must say that Shodan is a different kind of search engine. In their own words, it is the world's first computer search engine, and it is often termed a search engine for hackers. We can use Shodan to find different kinds of information about a target.

Let's use Shodan to search for web servers running Microsoft IIS version 8.0:

Shodan presented us with a page of entries from its database. Shodan provides a very neat and useful way to filter our results by the following criteria:

- Top countries

- Top Services

- Top organizations

- Top operating systems

- Top products

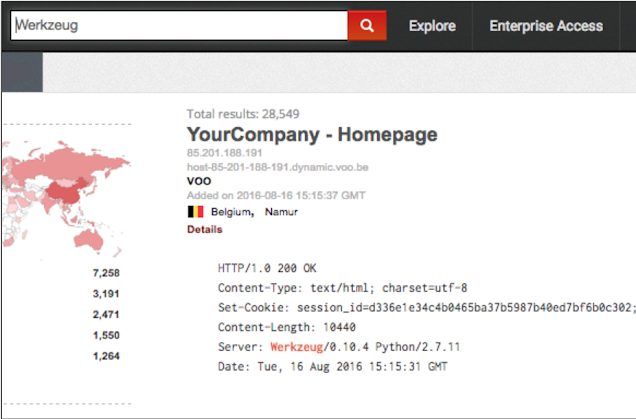

Recently there was a publicly disclosed code execution flaw in a Python-based debugger known as the Werkzeug debugger. We can give Shodan a shot and find computers running Werkzeug:

There we go! There is a list of computers running the vulnerable debugger.

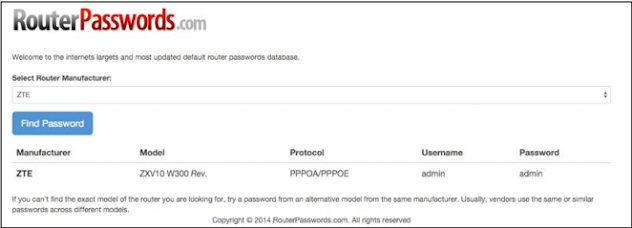

Now let's search for some ZTE OX253X routers. This particular brand of router is widely used by BSNL in India to provide WiMAX services.

The list contains the IP addresses of devices running the particular router that we asked for.

Although they are password protected, we can try the default login credentials, and most routers in the list will let us in because they are misconfigured.

I would recommend the website http://www.routerpasswords.com/ for looking up the default login credentials for a particular brand and model of router:

DNSdumpster

DNSdumpster (https://dnsdumpster.com/) is yet another passive subdomain enumeration tool. I will run a search for the packtpub.com website and display the results:

Here, DNSdumpster displays the subdomains of packtpub.com.

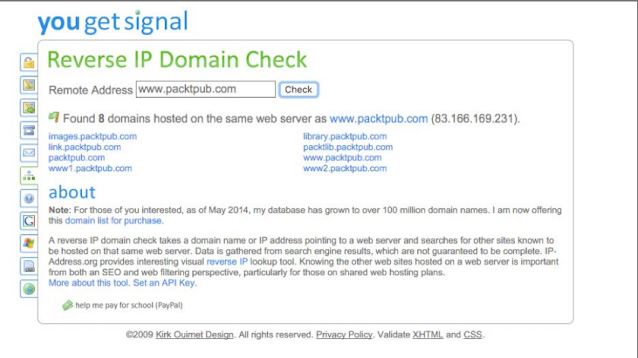

Reverse IP Lookup - YouGetSignal

YouGetSignal (http://www.yougetsignal.com/) is a website that offers a reverse IP lookup feature. In layman's terms, the website will try to get the IP address for each hostname entered and then do a reverse IP lookup on it, so it will search for other hostnames that are associated with that particular IP.

A classic situation where a reverse lookup helps is when the website is hosted on a shared server. If we are tasked with penetrating a website, we can try to break into other sites hosted on the same server.

Then we can escalate privileges to get into the targeted website hosted on the same server.

For demonstration purposes, I will do a reverse IP lookup on http://www.packtpub.com/ through YouGetSignal.

YouGetSignal gave us a list of the potential domains hosted on the same server.
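
The shared-hosting idea behind reverse IP lookup can be illustrated with a small Python sketch that groups a list of known hostnames by the IP address they resolve to. This is not how YouGetSignal obtains its data; the function name and the pluggable resolver are assumptions, and the sketch can only group hostnames we already know about.

```python
import socket
from collections import defaultdict


def group_by_ip(hostnames, resolve=socket.gethostbyname):
    """Map each IP address to the hostnames that resolve to it."""
    groups = defaultdict(list)
    for host in hostnames:
        try:
            groups[resolve(host)].append(host)
        except OSError:
            # Hostnames that do not resolve are skipped.
            continue
    return dict(groups)


if __name__ == "__main__":
    # Hostnames that share an IP address may sit on the same server.
    print(group_by_ip(["example.com", "www.example.com"]))
```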

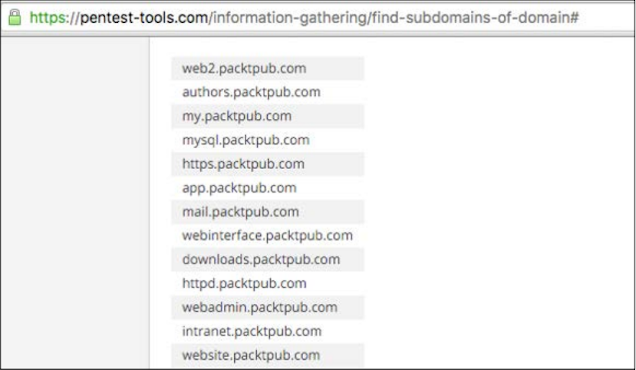

Pentest-Tools

Pentest-Tools (https://pentest-tools.com/home) provides a good set of web-based tools for passive information gathering, web application testing, and network testing.

In this section, I will only cover the information gathering tool for finding subdomains.

As usual, we will target packtpub.com on the Pentest-Tools website.

Pentest-Tools also has a tool similar to YouGetSignal, called VHosts, which claims to find sites that share the same IP address. You can check it out yourself.

Google Advanced Search

We can also use Google for passive information gathering. Since this method is passive, the target site does not know about our reconnaissance. The Google search engine provides a decent set of special directives to refine search results according to our needs. Directives are in the following format:

directive:query

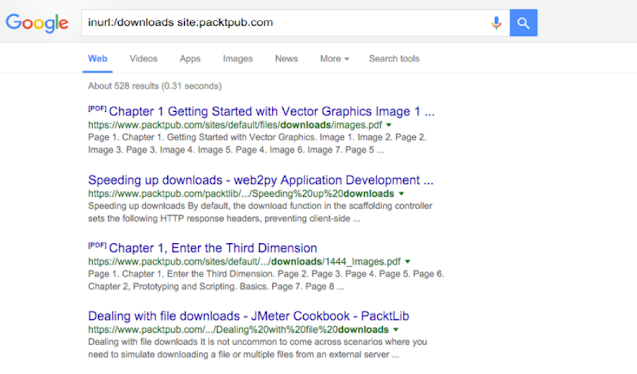

These directives can be very useful for finding juicy resources belonging to the target. As an example, let's do an advanced Google search on packtpub.com, which will list all indexed PDF files:

ext:pdf site:packtpub.com

In this advanced search, we used the ext:pdf directive to get only files ending with the PDF extension, and site:packtpub.com ensures that our results are restricted to the packtpub.com domain.

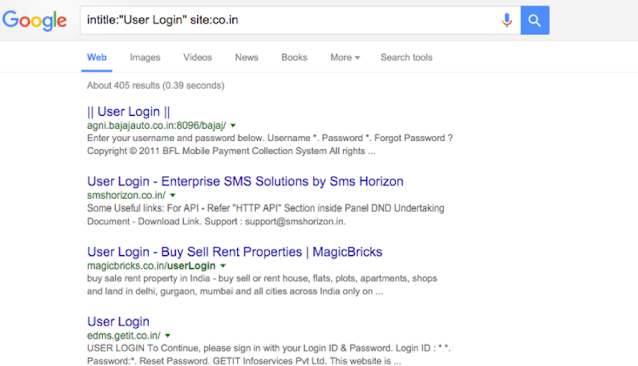

If we want to match a particular path in the website URL, then we can use the inurl directive:

To look for a particular title in the results, we can use the intitle directive:

Look at that! We ran a simple intitle search for the keywords user login across all co.in domains, and we found results with the user login panels of many websites.

Now let's combine some advanced search directives and see what the result looks like. We will combine the intext, ext, and site directives to detect publicly available database dumps of websites:

backup.sql intext:"SELECT" ext:sql site:net

This search query means that we are looking for backup.sql anywhere in the results, the content of the result should contain the keyword SELECT, the extension should be SQL, and we only want results from the .net top-level domain.
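
Combining directives in the directive:query format is mechanical enough to sketch in Python. The helper names below are hypothetical, and the choice to quote only values that contain spaces is an assumption made for the example.

```python
from urllib.parse import urlencode


def build_dork(keywords="", **directives):
    """Join free-text keywords and directive:query pairs into one search string."""
    parts = [keywords] if keywords else []
    for name, value in directives.items():
        # Quote multi-word values so they stay attached to their directive.
        if " " in value:
            value = f'"{value}"'
        parts.append(f"{name}:{value}")
    return " ".join(parts)


def search_url(query):
    """Build a Google search URL with the query properly URL-encoded."""
    return "https://www.google.com/search?" + urlencode({"q": query})


print(build_dork(ext="pdf", site="packtpub.com"))
print(build_dork("backup.sql", intext="SELECT", ext="sql", site="net"))
print(search_url(build_dork(intitle="user login", site="co.in")))
```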

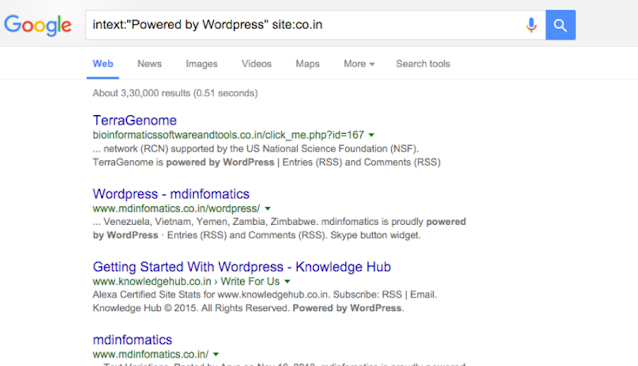

We can search for software by searching Google for its special signature. For example, most WordPress websites have a footer that says Powered by WordPress. We can use this kind of pattern and adapt our search queries accordingly.

The Google search results shown here are sites that run the WordPress blogging software.

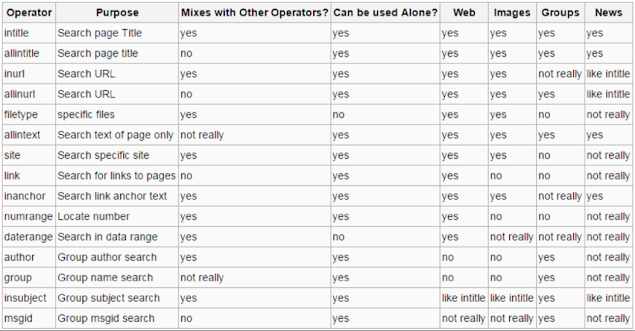

The following table shows a list of widely used Google search operators.

Summary

In this Web application penetration testing tutorial, we learned about information gathering, which is one of the foundations of penetrating a web application.

With time and hands-on practice, the information gathering phase will improve greatly. A proper blend of both active and passive methods can make it very easy.

Google Advanced Search Techniques are surprisingly powerful. When testing web applications, monitoring HTTP response headers is a good practice. It often helps to know more about web applications and their components.

In the next Web application penetration testing tutorial, we will go through cross-site scripting and various techniques related to it. XSS enables us to execute client-side code inside the browser, and it has some nasty repercussions.

Related:

- Web application penetration testing tutorials Common security protocols

- OWASP tutorials The owasp testing framework

- Burp suite tutorials Getting started with burp

- How to do penetration testing?
